Using SaaS-based digital tools to deploy surveys has afforded experiential marketers a host of luxuries:

· Easier data analysis

· Real-time feedback

· The ability to correct that pesky grammar mistake that was overlooked

· Elimination of tedious data entry

While we should embrace these conveniences, we cannot overlook the fact that survey design is a methodical process rooted in procedure, and making changes once a survey has been deployed has implications for the integrity of your data. (Or, put another way, a strictly SaaS tool cannot exercise the same level of strategy as a human being who is skilled in survey/research methods.)

Here are five simple pass/fail tests you should consider before making a survey change:

1) Is the change aesthetic?

By “aesthetic,” we’re referring to corrections of traditional grammar and spelling errors (apologies to our dear English teachers, we know there’s more to good grammar than aesthetics), graphic/logo updates, changes to fonts or font sizes, and even moving questions onto separate pages.

In most cases, aesthetic changes are perfectly fine and won't ruin the integrity of the survey instrument (so long as workflows/branching are not mistakenly viewed as an aesthetic change -- see #3 below).

2) Am I changing *how* questions are asked?

Scrutinize closely any change to the wording of a question or its response options. Consider this example:

“What is your annual income?” versus “What was your total household income last year before taxes?”

Both questions should deliver a truthful response, but the manner in which the second one is worded should elicit a more consistent and precise response across the audience.

Making wording changes like this after the survey has been deployed almost certainly will lead to an analysis that is “off,” which means you’d be using inaccurate data to make business decisions!

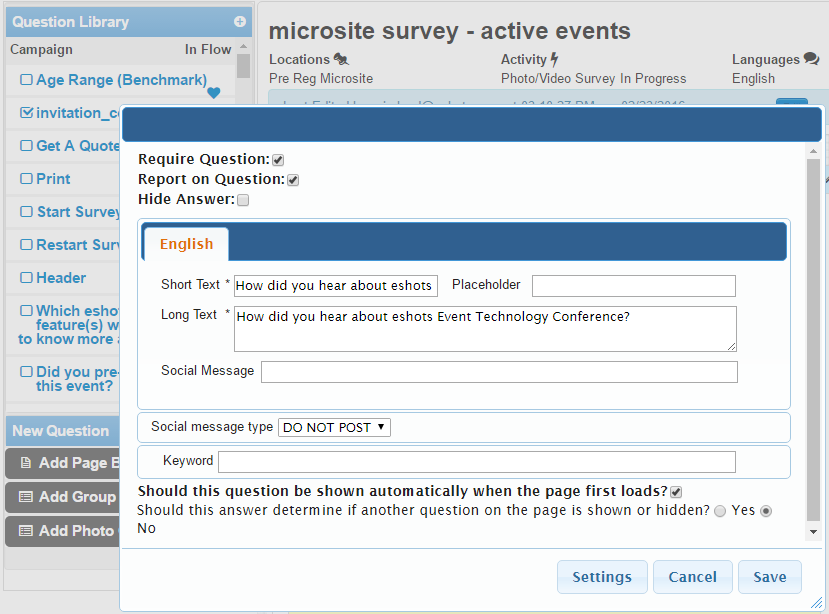

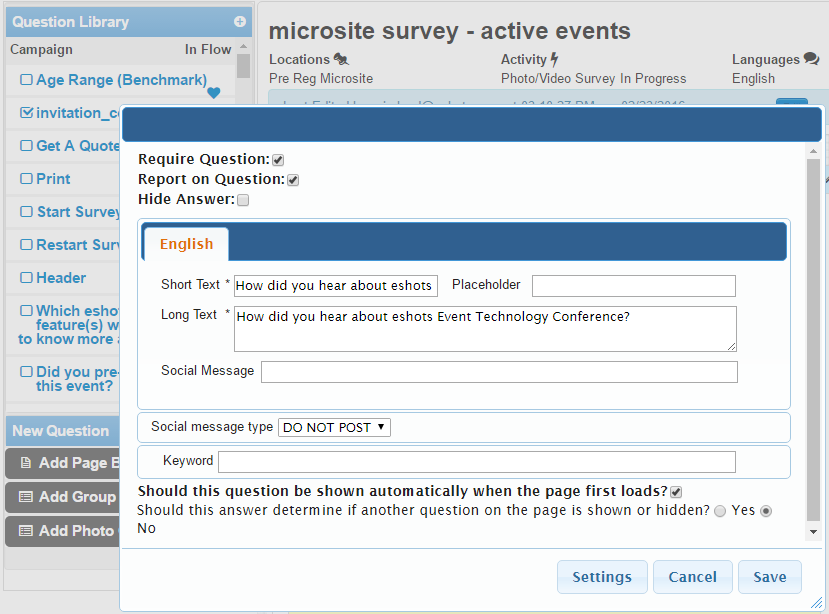

3) Will survey branching logic be modified?

As if the consideration of branching logic/flow isn’t enough of a chore when building a survey from scratch, what can be even more stressful is editing it once deployed.

Making changes to branching once a survey has been launched can break downstream business rules for data transfers, reports, sales matching, and CRM.

This is not to say it cannot be done, but there had better be a very good reason for doing it. You should consult with key stakeholders and involve QA to test and verify the downstream impact on the survey and the resulting data.

4) Will questions or answers be added or deleted?

Again, careful consideration and validation are needed to assess how this will impact any branching or skip logic designed into your survey, and to make sure a branch isn’t orphaned. Even more importantly, consider whether these changes will affect overall data trends when comparing results from event to event or year over year.
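As a rough illustration of the orphaned-branch check described above, here is a minimal Python sketch. It assumes the survey's branching can be exported as a simple mapping from each question ID to the question IDs its rules can route to. That mapping, the function name `find_branch_problems`, and the question IDs are all hypothetical and not tied to any particular survey tool.

```python
from typing import Dict, List, Set

# Hypothetical export format: question ID -> IDs its branching rules can route to.
Branching = Dict[str, List[str]]


def find_branch_problems(branching: Branching, start: str) -> Dict[str, Set[str]]:
    """Report branch targets that no longer exist and questions nobody can reach."""
    defined = set(branching)

    # Targets still referenced by a rule but missing from the survey
    # (for example, a question that was deleted after launch).
    dangling = {
        target
        for targets in branching.values()
        for target in targets
        if target not in defined
    }

    # Walk the survey from the first question to find everything still reachable.
    reachable: Set[str] = set()
    stack = [start]
    while stack:
        question = stack.pop()
        if question in reachable or question not in defined:
            continue
        reachable.add(question)
        stack.extend(branching[question])

    return {
        "dangling_targets": dangling,
        "unreachable_questions": defined - reachable,
    }


# Q2 was deleted, but Q1 still routes to it.
after_delete = {"Q1": ["Q2", "Q3"], "Q3": ["Q4"], "Q4": []}
print(find_branch_problems(after_delete, start="Q1"))

# Q1's route to Q3 was removed, so Q3 is orphaned.
after_rule_edit = {"Q1": ["Q2"], "Q2": ["Q4"], "Q3": ["Q4"], "Q4": []}
print(find_branch_problems(after_rule_edit, start="Q1"))
```

Running a check like this before and after an edit only flags structural problems. It does not tell you whether the change will distort event-to-event or year-over-year comparisons, which still needs a human review.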

5) Do other events or activations use the same survey?

If so, depending on how your campaigns are set up, the change could impact other concurrent events or, worse, create disparities in the data collected so that results cannot be compared across events.

Understandably, there are many moving components to events. Continuously changing surveys and the resulting data, however, will skew results. To avoid these issues, we recommend having one central owner for survey design to govern business rules and ensure downstream processes and reporting trends will not break.

The eshots client services team is well-versed in the many risks associated with making survey edits. It’s a common request that they handle, which frees up capacity so our clients can focus on the ever-changing brand requests, staffing, and logistical demands of activating brand mobile tours and events.